If you give a Moose an Apple Watch (unfinished)

If you give a Moose an Apple Watch, then they’ll want to use it to automate their Alexa smart home.

Which will require you to implement an Amazon Alexa Voice Service (AVS) client in a Swift and SwiftUI Apple Watch app.

So that the moose can say things like “Turn on my electric blanket” when out in the wilds, and be nice and cosy by the time they tuck up in bed at the end of the day.

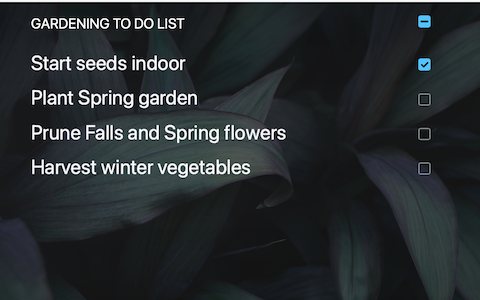

Which will make the moose want to be able to add items to their shopping list from their watch as they drift off to sleep, thinking about their day, and to view their shopping list to see what is already on it.

So you’ll implement the original Alexa visualization mechanism, called Templates, in SwiftUI.

Which will leave the moose wondering why they can’t check items off their shopping list, while ruminating on what they accomplished today, which requires you to implement support for the Alexa Presentation Language (APL).

In SwiftUI.

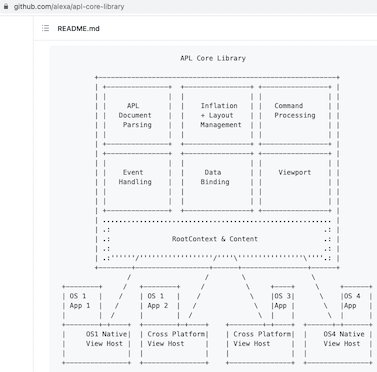

Which means you’ll discover that Amazon have a fantastic library for parsing APL documents, called the APL Core Library.

Which you’ll find is written in C++, which you knew inside-out.

In the early 1990s.

Which will remind you that you can’t call C++ directly from Swift.

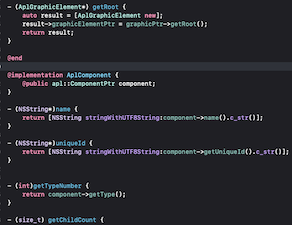

Which will send you into the delightful world of Objective-C for the very first time, because Swift can talk to Objective-C, and Objective-C (in its Objective-C++ form) can talk to C++.

Which will make you write Objective-C wrappers for all of the key APL Core Library C++ classes.

Which will help you start rendering an APL document in your SwiftUI iOS app, but it won’t display properly.

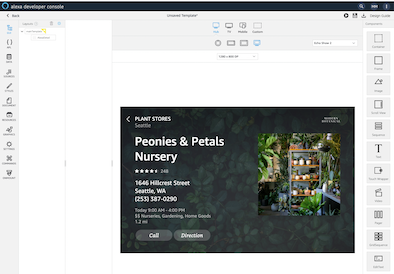

Which will bring the APL Authoring Tool to your attention, helping you understand how APL should render, although you’ll still not understand why yours does not render properly.

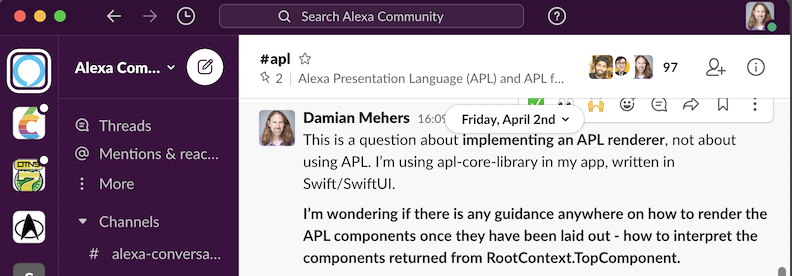

Which will lead you to ask for help on the Alexa Community Slack, where someone will point you to APL ViewHost Web, a renderer for the output of the APL Core Library.

Which is written in TypeScript, which you’ve used in the past, and which will help you get further, but not quite there, until you become intimately familiar with how SwiftUI does its stuff.

Which will get you to the point where you can kind of render an APL document in a SwiftUI view.

Which will make you happy until you discover that part of APL support is being able to render Scalable Vector Graphics (SVG) path data.

Which will make you realize that SwiftUI has no native SVG support.

Which will lead you to take a look at the Macaw library, but discover it isn’t suitable for you because you need watchOS support.

Which will make you write your own Swift/SwiftUI SVG Renderer which should run on iOS, macOS, watchOS and tvOS.

Which will have you deciphering paths such as `M48,24c0,13.255-10.745,24-24,24S0,37.255,0,24S10.745,0,24,0S48,10.745,48,24z` (a circle) with the help of an online viewing tool.
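To make path data like that less cryptic, here is a minimal tokenizer sketch in Swift. It is not the code from SvgVectorView; `PathToken` and `tokenizePath` are hypothetical names. The tricky part is that SVG path data lets numbers run together, so `13.255-10.745` is two numbers: a minus sign, or a second decimal point, starts a new number unless the sign belongs to an exponent.

```swift
// Hypothetical token type: either a path command letter or a number.
enum PathToken: Equatable {
    case command(Character)
    case number(Double)
}

// Splits SVG path data into command letters and numbers.
func tokenizePath(_ path: String) -> [PathToken] {
    var tokens: [PathToken] = []
    var current = ""          // characters of the number being built
    var seenDot = false       // does `current` already contain a "."?
    var seenExponent = false  // does `current` already contain an "e"/"E"?

    // Emits the number accumulated so far (if any) and resets the state.
    func flush() {
        if let value = Double(current) {
            tokens.append(.number(value))
        }
        current = ""
        seenDot = false
        seenExponent = false
    }

    for ch in path {
        if ch >= "0" && ch <= "9" {
            current.append(ch)
        } else if ch == "." {
            // A second "." starts a new number: "1.5.5" is 1.5 then .5
            if seenDot || seenExponent { flush() }
            current.append(ch)
            seenDot = true
        } else if ch == "-" || ch == "+" {
            // A sign starts a new number unless it follows an exponent ("1e-3").
            if current.last != "e" && current.last != "E" { flush() }
            current.append(ch)
        } else if (ch == "e" || ch == "E") && !current.isEmpty {
            current.append(ch)
            seenExponent = true
        } else if ch == "," || ch.isWhitespace {
            flush()
        } else {
            // Anything else is treated as a command letter (M, c, S, z, ...).
            flush()
            tokens.append(.command(ch))
        }
    }
    flush()
    return tokens
}
```

Run on the circle path, this yields the command `M` with the numbers `48` and `24`, the command `c` followed by six numbers, three `S` commands with four numbers each, and finally `z`.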

Which will lead you into the joys of Bezier curves, which will make you wish you’d paid more attention to mathematics in school, as you learn how to calculate the first control point for smooth curves.
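In SVG, the `S` (smooth cubic) command only lists the second control point and the end point. The first control point is the reflection of the previous segment's second control point through the current point, or simply the current point if the previous command was not a cubic (`C`, `c`, `S` or `s`). A minimal sketch, with `CurvePoint` and `smoothFirstControlPoint` as hypothetical names:

```swift
// Hypothetical 2D point type for path geometry.
struct CurvePoint: Equatable {
    var x: Double
    var y: Double
}

/// First control point for an SVG "S"/"s" (smooth cubic) segment.
/// - current: the current point, i.e. the end of the previous segment.
/// - previousControl2: the previous segment's second control point,
///   or nil if the previous command was not C, c, S or s.
func smoothFirstControlPoint(current: CurvePoint, previousControl2: CurvePoint?) -> CurvePoint {
    guard let c2 = previousControl2 else {
        // No previous cubic: the control point coincides with the current point.
        return current
    }
    // Reflect c2 through the current point: current + (current - c2).
    return CurvePoint(x: 2 * current.x - c2.x, y: 2 * current.y - c2.y)
}
```

For the circle above, the relative `c` segment starts at (48, 24), so its second control point is (37.255, 48) and it ends at (24, 48). The first control point of the following `S` segment is therefore (2 * 24 - 37.255, 48) = (10.745, 48).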

Which will make you want to share your SwiftUI SVG path data renderer, since you can’t be the only one who needs it, and it is a nice independent component.

Which will make you learn for the first time how to publish a Swift Package, and make all the usual initial mistakes. You call it SvgVectorView because naming is hard.

Which will be there for everyone to see in the GitHub history, but at least you’ve learned how to publish Swift packages, which you’ve always wanted to learn.
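For reference, a minimal manifest for a SwiftUI-only package that should build on iOS, macOS, watchOS and tvOS might look like the sketch below. It is hypothetical, not the actual SvgVectorView manifest; the target names are illustrative, and the minimum OS versions are the ones in which SwiftUI first shipped.

```swift
// swift-tools-version:5.3
// Hypothetical manifest sketch; names are illustrative.
import PackageDescription

let package = Package(
    name: "SvgVectorView",
    platforms: [
        // SwiftUI first shipped with these OS versions.
        .iOS(.v13), .macOS(.v10_15), .watchOS(.v6), .tvOS(.v13)
    ],
    products: [
        .library(name: "SvgVectorView", targets: ["SvgVectorView"])
    ],
    targets: [
        .target(name: "SvgVectorView"),
        .testTarget(name: "SvgVectorViewTests", dependencies: ["SvgVectorView"])
    ]
)
```

Swift Package Manager resolves package versions from semantic-version Git tags (for example `1.0.0`), so publishing a release is largely a matter of pushing such a tag.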

Which will make you lose all context of what you are really trying to do, which is to support APL in your app.

Which will bring you back to integrating your new SwiftUI SVG support into your app.

Which will make you realize things still won’t render properly.

Which will cause you to remember the measure callbacks the APL Core Library makes back into your code to measure how much screen space text will take. The ones that were left as the default example callbacks.
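For context, APL Core lays components out with the Yoga flexbox engine, so a text measurement callback receives a width and a height, each with a Yoga-style measure mode: undefined (no constraint), exactly (the size is dictated) or at most (natural size, capped). The sketch below shows how those modes might be resolved in Swift. `MeasureMode`, `TextSize`, `measureText` and the injected `naturalSize` closure are hypothetical stand-ins rather than the APL Core API; real code would measure the text with the platform's text layout.

```swift
// Hypothetical Swift mirror of a Yoga-style measure mode.
enum MeasureMode {
    case undefined  // no constraint: report the natural size
    case exactly    // the layout engine dictates the size
    case atMost     // natural size, but no larger than the constraint
}

struct TextSize: Equatable {
    var width: Double
    var height: Double
}

/// Resolves one dimension from the text's natural extent and its constraint.
func resolve(natural: Double, constraint: Double, mode: MeasureMode) -> Double {
    switch mode {
    case .undefined: return natural
    case .exactly: return constraint
    case .atMost: return min(natural, constraint)
    }
}

/// Sketch of a measure callback. `naturalSize(text, maxWidth)` stands in for
/// real text layout and should return the size of `text` wrapped at `maxWidth`.
func measureText(_ text: String,
                 width: Double, widthMode: MeasureMode,
                 height: Double, heightMode: MeasureMode,
                 naturalSize: (String, Double) -> TextSize) -> TextSize {
    // Only wrap when there is a width constraint to wrap against.
    let maxWidth = widthMode == .undefined ? Double.infinity : width
    let natural = naturalSize(text, maxWidth)
    return TextSize(width: resolve(natural: natural.width, constraint: width, mode: widthMode),
                    height: resolve(natural: natural.height, constraint: height, mode: heightMode))
}
```

Leaving the example callbacks in place means every text component gets a size that has nothing to do with its actual content, which is enough to throw off the whole layout.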

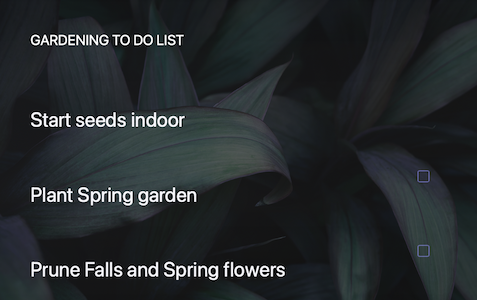

Which, once updated, get it rendering better. But those vector graphics still don’t look right.

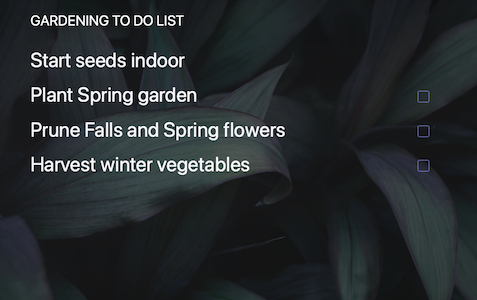

Which will lead you to discover that you need to ignore the alpha channel for SVG fills, after which it displays correctly.

Which will make you want to add interactivity, which means detecting when the user taps the screen and sending the screen coordinates to the APL Core Library.

Which will lead you to discover that the SwiftUI TapGesture doesn’t provide the tapped coordinates.

Which will help you find a hack that uses a DragGesture to find the tapped coordinates. You’ll sincerely hope this won’t mess up your future attempts to detect dragging.
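In SwiftUI the hack usually takes the form of `DragGesture(minimumDistance: 0)` with an `.onEnded` handler that reads the value's `startLocation` and `location`. The part that keeps it from swallowing real drags is deciding how far the finger may move before the gesture stops counting as a tap. That decision is sketched below in plain Swift so it runs without SwiftUI; `GesturePoint`, `PointerEvent`, `classifyGesture` and the 10-point default threshold are all assumptions.

```swift
// Hypothetical point type (SwiftUI would hand you CGPoint values).
struct GesturePoint {
    var x: Double
    var y: Double
}

enum PointerEvent: Equatable {
    case tap(x: Double, y: Double)
    case drag(dx: Double, dy: Double)
}

/// Classifies a finished zero-minimum-distance drag as a tap or a real drag.
/// `tapSlop` is how far (in points) the finger may wander and still count
/// as a tap; the default of 10 is an arbitrary assumption.
func classifyGesture(start: GesturePoint, end: GesturePoint, tapSlop: Double = 10) -> PointerEvent {
    let dx = end.x - start.x
    let dy = end.y - start.y
    if (dx * dx + dy * dy).squareRoot() <= tapSlop {
        // Report where the finger went down as the tap location.
        return .tap(x: start.x, y: start.y)
    }
    return .drag(dx: dx, dy: dy)
}
```

Inside the `.onEnded` closure you would pass in `value.startLocation` and `value.location` and forward any `.tap` coordinates to the APL Core Library, leaving `.drag` free for scrolling later.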

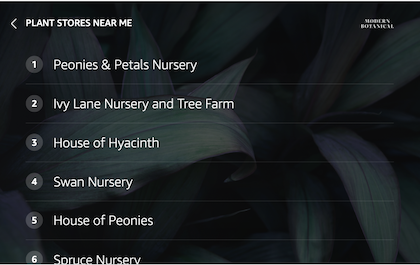

Which will delight you when all of a sudden you’ll find that tapping actually works in your APL document rendered in SwiftUI, and you’ll be irrationally optimistic that the end is near.

Which will make you try to render an APL document that scrolls, and it won’t look good at all.

Which is certainly nothing like it is supposed to look.

Which will keep you hacking away until it scrolls properly.

Which will lead you to the next step: injecting commands, such as a command to speak the list.

Which will bring to mind a key tenet of SwiftUI: it is about describing what you want rather than how to do it. Specifically, you can’t scroll to a specific offset, which is what APL Core expects.

Which will take you to a hack that you are not proud of, but which works: when you receive a Speak command, you’ll look for the deepest child of the component being spoken and scroll that into view.
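The deepest-child search itself is a plain tree walk. Here is a minimal sketch over a hypothetical, simplified `Component` type rather than the wrapped APL Core classes; ties go to the first deepest component in document order. In SwiftUI the result could then be brought into view with `ScrollViewReader`'s `scrollTo(_:anchor:)` using the component's id, which sidesteps the lack of offset-based scrolling.

```swift
/// Hypothetical, simplified stand-in for a node in the APL component tree.
final class Component {
    let id: String
    let children: [Component]

    init(id: String, children: [Component] = []) {
        self.id = id
        self.children = children
    }
}

/// Returns the deepest descendant of `root`, or `root` itself if it has no
/// children. Among equally deep components, the first in document order wins.
func deepestDescendant(of root: Component) -> Component {
    var best = root
    var bestDepth = 0
    // Iterative depth-first (pre-order) walk; each entry is (node, depth).
    var stack: [(Component, Int)] = [(root, 0)]
    while let entry = stack.popLast() {
        let (node, depth) = entry
        // Strictly greater, so the first component found at a depth is kept.
        if depth > bestDepth {
            best = node
            bestDepth = depth
        }
        // Push children in reverse so they are visited in document order.
        for child in node.children.reversed() {
            stack.append((child, depth + 1))
        }
    }
    return best
}
```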

Which will almost lead you to declare victory, but when testing “What is the weather?” you’ll realize that templates (which APL replaces) are still being used for the weather.

Which means you’ll still need to support templates.

Which will make you do some more tests on other devices.

Which will make you think “enough already” and you’ll ship an update in TestFlight with support for APL.

Which will make you realize that some things still don’t render properly: “Set a 10-minute timer” gives an error.

Which tells you that you are still at the start of the journey.

Note: This post is unfinished. The “real” post is here.
