Bringing Alexa to the Apple Vision Pro

Voice in a Can

In 2018, while on my daily train commute, I started playing with the Alexa Voice Service (AVS), which provides a network API to Alexa.

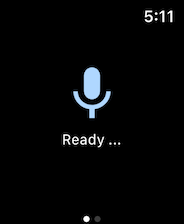

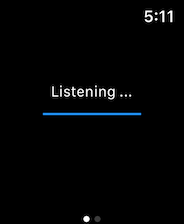

First I got an Alexa client running on my iPhone, then I got it running on my Apple Watch. Yes! I could now turn on my electric blanket from my Apple Watch.

It was painfully slow, both to develop (I was using a 12” MacBook) and to run. But it worked.

Since then the app, Voice in a Can, has been moderately successful, and to my surprise no one else has released an Alexa app for the Apple Watch. Not even Amazon.

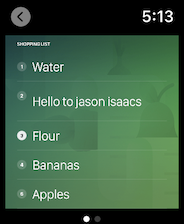

I eventually added support for the Alexa Presentation Language, even on the tiny Apple Watch screen, bridging Amazon’s APL Core C++ library with Swift.

The Apple Vision Pro

I’d already done some experiments using Unity, but when the Apple Vision Pro was announced I decided to take things more seriously.

But what value is there in bringing Voice in a Can to “Spatial Computing”?

Putting a virtual echo with you in an immersive environment, a dark void with just you and the echo, would make no sense. It would just be you and Alexa, and let’s be real, it’s not that great a conversationalist.

However, one style that Apple supports for app windows is volumetric, meaning that a window is not a flat plane but instead has depth. You can insert 3D objects in that space as part of your app’s UI. That was interesting.

What if you could settle into any real-world space, tuck a virtual Alexa away on a shelf, and carry on with other work in your headset? Then, when you want to use it, just glance at it, tap your fingers and speak…
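For readers who have not used volumetric windows, here is a minimal sketch of how one can be declared in SwiftUI on visionOS. The app name, the placeholder sphere and the 30 cm default size are assumptions for illustration, not taken from Voice in a Can.

```swift
import SwiftUI
import RealityKit

// Hypothetical app used only to illustrate a volumetric window.
@main
struct VolumetricSketchApp: App {
    var body: some Scene {
        WindowGroup {
            // RealityView hosts RealityKit entities inside the window's volume.
            RealityView { content in
                // Placeholder content: a grey sphere with a 5 cm radius.
                let sphere = ModelEntity(
                    mesh: .generateSphere(radius: 0.05),
                    materials: [SimpleMaterial(color: .gray, isMetallic: false)]
                )
                content.add(sphere)
            }
        }
        // Give the window depth instead of a flat plane.
        .windowStyle(.volumetric)
        // Assumed size: a 30 cm cube.
        .defaultSize(width: 0.3, height: 0.3, depth: 0.3, in: .meters)
    }
}
```

A volume like this can be placed anywhere in the room while other app windows stay open around it.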

I started building a virtual echo using Reality Composer Pro, but ended up just coding it by hand:

    // Builds the virtual echo: a black cylinder with a blue ring on top.
    // `black` and `blue` are assumed to be materials defined elsewhere in the app.
    func buildEcho() -> Entity {
        let height: Float = 0.1
        let radius: Float = 0.1
        let rimHeight: Float = 0.02
        let rimTranslation: SIMD3<Float> = [0, 0.05966, 0]

        let mainCylinder = ModelEntity(mesh: .generateCylinder(height: height, radius: radius), materials: [black])
        // Make the cylinder a target for input and give it a hover effect.
        mainCylinder.components.set(InputTargetComponent())
        mainCylinder.components.set(CollisionComponent(shapes: [.generateBox(size: [0.2, radius, 0.2])]))
        mainCylinder.components.set(HoverEffectComponent())

        // Blue disc that sits on top of the main cylinder.
        let rim = ModelEntity(mesh: .generateCylinder(height: rimHeight, radius: radius), materials: [blue])
        rim.transform.translation = rimTranslation

        // Slightly smaller black disc, raised a fraction above the rim, so only a blue ring stays visible.
        let rimCenter = ModelEntity(mesh: .generateCylinder(height: rimHeight, radius: 0.09), materials: [black])
        rimCenter.transform.translation = [0, 0.05967, 0]

        mainCylinder.children.append(rim)
        mainCylinder.children.append(rimCenter)
        return mainCylinder
    }

Once I had this model, I could place it in my app’s UI:

Wiring up tapping on the echo, changing the color of the rim, and then hooking into my existing engine was pretty straightforward. I was able to re-use my iOS engine for grabbing microphone samples and streaming them to the AVS servers:

    RealityView { content in
        content.add(buildEcho())
    }
    .accessibilityLabel(Text(Localized.start.text))
    .accessibilityAddTraits(AccessibilityTraits.startsMediaSession)
    .gesture(TapGesture().targetedToAnyEntity().onEnded { _ in
        if stateCoordinator.state != .connecting
            && stateCoordinator.state != .activeListening
            && stateCoordinator.state != .listening {
            speechRecognizer.initiateRecognize()
        } else {
            speechRecognizer.stop()
        }
    })
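The tap handler above is effectively a toggle: a tap starts listening unless a session is already connecting or listening, in which case it stops. One way to make that intent explicit is a small pure function, sketched below. The `AssistantState` and `TapAction` types are hypothetical; only `.connecting`, `.listening` and `.activeListening` appear in the real code, and `.idle` and `.speaking` are assumed for illustration.

```swift
// Hypothetical state type; only the three "busy" cases come from the code above.
enum AssistantState {
    case idle, connecting, listening, activeListening, speaking
}

// What a tap on the echo should do.
enum TapAction {
    case startListening, stopListening
}

// Maps the current state to the action a tap should trigger.
func action(forTapIn state: AssistantState) -> TapAction {
    switch state {
    case .connecting, .listening, .activeListening:
        // A session is already in progress, so the tap cancels it.
        return .stopListening
    case .idle, .speaking:
        // Nothing is being captured, so the tap starts a new request.
        return .startListening
    }
}
```

Because the switch is exhaustive, adding a new state later forces a decision about how a tap should behave in it.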

The next stage was to show that Alexa Presentation Language (APL) goodness. I decided to do this by showing a completely separate window, which could be moved and navigated. I was able to re-use my existing SwiftUI views for rendering APL - Apple have done a great job of enabling UI component reuse.
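As a rough illustration of the separate-window approach, the sketch below declares a second window scene and opens it with SwiftUI's `openWindow` environment action. The window identifiers, the view names and the button trigger are all assumptions; in a real Alexa client the window would more likely be opened when an APL document arrives.

```swift
import SwiftUI

// Hypothetical stand-in for the existing SwiftUI views that render APL.
struct APLPlaceholderView: View {
    var body: some View {
        Text("APL document goes here")
    }
}

// Hypothetical trigger; a button is used only to keep the sketch small.
struct ShowDisplayButton: View {
    @Environment(\.openWindow) private var openWindow

    var body: some View {
        Button("Show display") {
            // Opens the scene declared with the matching id below.
            openWindow(id: "apl")
        }
    }
}

@main
struct WindowsSketchApp: App {
    var body: some Scene {
        // Main window (the echo volume in the real app).
        WindowGroup(id: "main") {
            ShowDisplayButton()
        }
        // Separate window that the user can move around independently.
        WindowGroup(id: "apl") {
            APLPlaceholderView()
        }
    }
}
```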

3D Sound

For the virtual Alexa cylinder to feel real, I wanted the sound to appear to be coming from the cylinder itself … I didn’t want the audio to just play ambiently from the environment.

To do this I updated the above code that builds the virtual echo to indicate that it supports spatial audio:

    mainCylinder.spatialAudio = SpatialAudioComponent(directivity: .beam(focus: 0.75))

Then, when I play audio, I make sure to use the RealityKit AudioPlaybackController returned by the cylinder entity’s prepareAudio.
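A minimal sketch of that playback step follows. The helper name `play(_:from:)` is hypothetical, and how the `AudioResource` is produced from Alexa's response audio is not shown here.

```swift
import RealityKit

// Hypothetical helper: plays a resource so that it is emitted from the given entity.
@MainActor
@discardableResult
func play(_ resource: AudioResource, from emitter: Entity) -> AudioPlaybackController {
    // prepareAudio ties the resource to the entity, so the sound is
    // rendered from the entity's position in the scene.
    let controller = emitter.prepareAudio(resource)
    controller.play()
    // Keep the controller if playback needs to be paused or stopped later.
    return controller
}
```

Called with the cylinder entity as the emitter, the returned controller can be stored so that speech can be stopped when the user taps again.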

Now when Voice in a Can speaks, the audio should sound as though it is coming from the cylinder.

Settings

I’m not sure if I’ve got this right. Voice in a Can has many settings, for example what language you use, or whether or not to show captions.

I show the settings in a separate window. But how to initiate the display of the settings?

Apple Vision Pro apps typically use ornaments for this, but I just wanted the plain, simple cylinder to be displayed … I didn’t want to clutter it up.

I ended up using a double-tap gesture on the cylinder to display settings. If I ever get my hands on a real device I’ll get a chance to see how usable this really is.
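A double-tap like this can be expressed with `TapGesture(count: 2)`. The sketch below is an illustration under assumptions: the view name, the stand-in cylinder and the `"settings"` window id are hypothetical, and the app would need to declare a `WindowGroup(id: "settings")` scene for the call to open anything. How the double-tap coexists with the single-tap handler is not shown.

```swift
import SwiftUI
import RealityKit

// Hypothetical view showing a double-tap on an entity opening a settings window.
struct EchoVolumeView: View {
    @Environment(\.openWindow) private var openWindow

    var body: some View {
        RealityView { content in
            // Stand-in for the echo model; it needs input and collision
            // components to receive gestures.
            let target = ModelEntity(
                mesh: .generateCylinder(height: 0.1, radius: 0.1),
                materials: [SimpleMaterial(color: .black, isMetallic: false)]
            )
            target.components.set(InputTargetComponent())
            target.components.set(CollisionComponent(shapes: [.generateBox(size: [0.2, 0.1, 0.2])]))
            content.add(target)
        }
        .gesture(
            // count: 2 makes the gesture fire only on a double-tap.
            TapGesture(count: 2)
                .targetedToAnyEntity()
                .onEnded { _ in
                    // Assumes the app declares WindowGroup(id: "settings").
                    openWindow(id: "settings")
                }
        )
    }
}
```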

Try it out!

I’ve applied for the developer labs several times, but never got accepted … I’ve applied for the final one, on 25th Jan in London … fingers crossed.

Voice in a Can is under review for App Store release right now, and it is available as a TestFlight beta. If you have a chance to try it out, please let me know what you think.